Your prompts are using 4x more tokens than you need

A deep-dive into how to use type-definitions instead of json schemas in prompt engineering to improve accuracy and reduce costs

Aaron Villalpando

LinkedInIf you're doing extraction / classification tasks with LLMs you're most likely using Pydantic or Zod (or a library that uses them, like Langchain, Marvin, Instructor) to generate json schemas and injecting them into the prompt or using function calling.

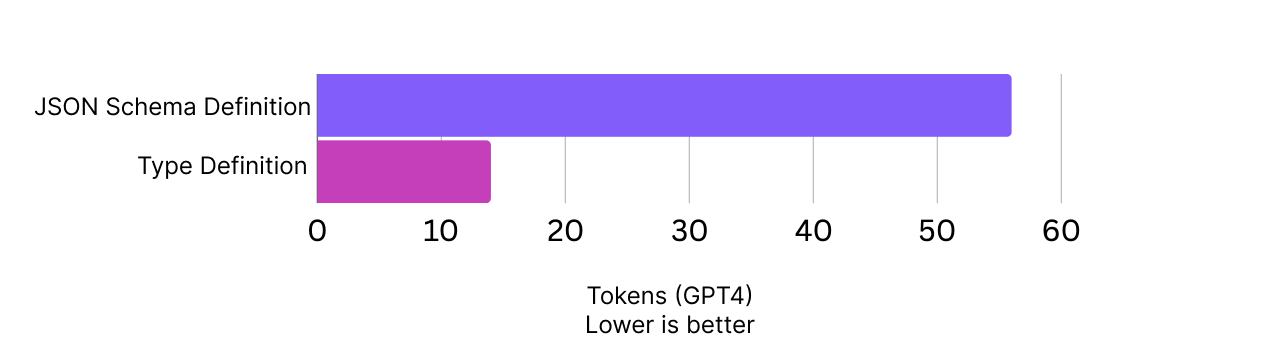

We propose using type-definitions, not JSON schemas, in your LLM prompts. Type-definitions use 60% less tokens than JSON schemas, with no loss of information. Less tokens is a feature, not a bug, that leads to better cost, latency and accuracy.

Before explaining our perspective into the transformer attention mechanism and why type-definitions work better for them, lets look at a few prompts using JSON-schemas vs type-definitions.

Overview

> What are type-definitions?

Type-definitions are closer to typescript interface definitions for representing the data-model rather than a full blown JSON schema. Let's actually do a two extraction tasks to show you what we mean.

Example 1: Comparing type-defintions to JSON Schema

We'll write a prompt to extract the following OrderInfo from a chunk of text using an LLM.

class OrderInfo(BaseModel):

id: str

price: int # in centsUsing JSON Schema

Here's the prompt generated using the Instructor Library to do the extraction.

We use <system> and <user> to denote chat messages for brevity. No additional formatting was added, and the input text has been omitted.

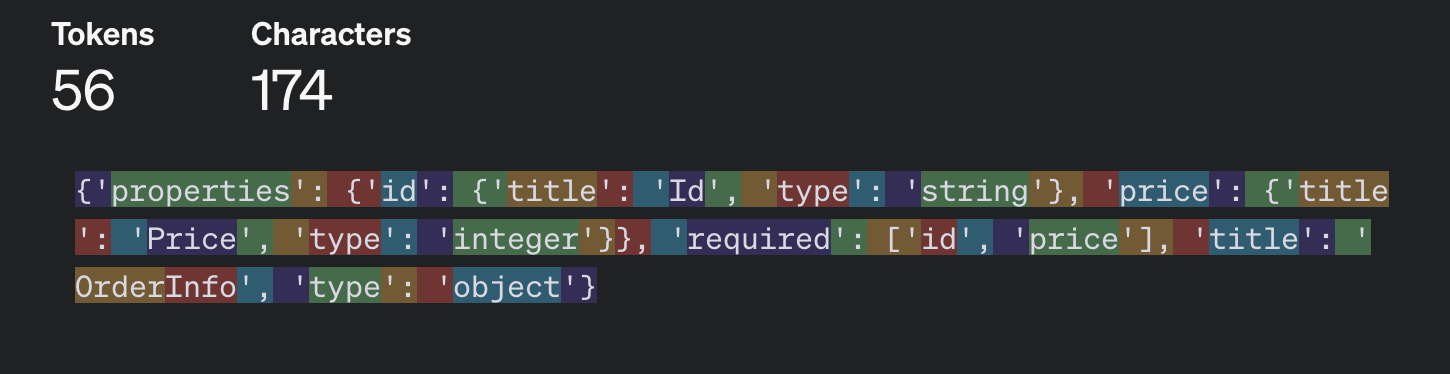

<system>As a genius expert, your task is to understand the content and provide the parsed objects in json that match the following json_schema: {'properties': {'id': {'title': 'Id', 'type': 'string'}, 'price': {'title': 'Price', 'type': 'integer'}}, 'required': ['id', 'price'], 'title': 'OrderInfo', 'type': 'object'}

Make sure to return an instance of the JSON, not the schema itself

<user>{input}

<user>Return the correct JSON response within a ```json codeblock. not the JSON_SCHEMA'}]JSON Schema tokens: 56

Note: we explicitly excluded the remainder of the prompt template in the count, as we are only talking about the actual schema. Most libraries and frameworks add even more boilerplate that actually makes it harder for the model to understand your task - but more on this later.

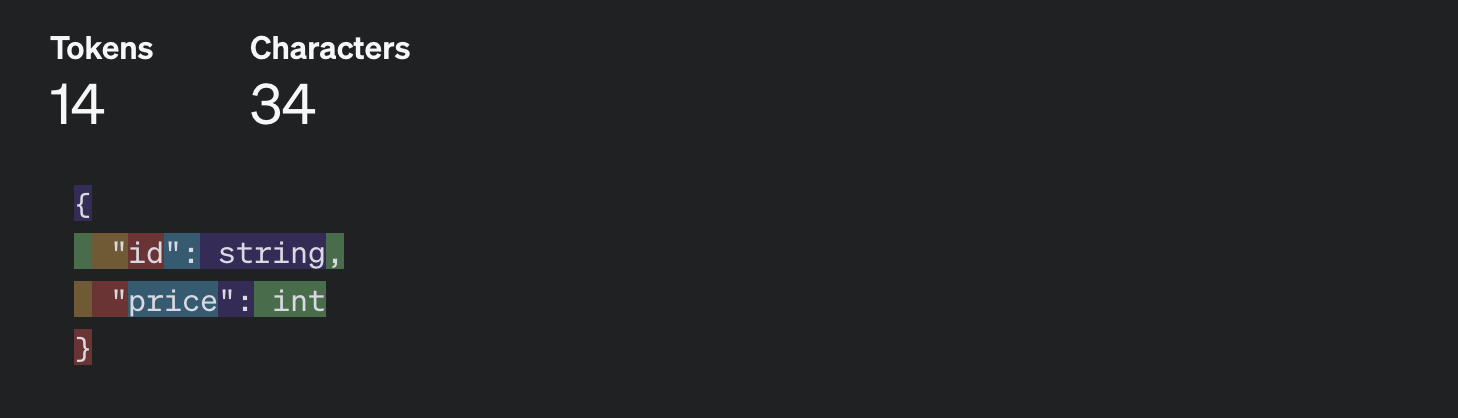

Using type definition

This prompt yields the same results, with a 4x smaller schema size.

<system>Extract the following information from the text.

{input}

---

Return the information in JSON following this schema:

{

"id": string,

"price": int

}

JSON:Type-definition tokens: 14 (4x reduction)

First test case results:

Example 2: A Complex object, with descriptions and enums

Now we'll look at getting this object from the LLM — it's still OrderInfo, but with more metadata. Here's the python models:

class Item(BaseModel):

name: str

quantity: int

class State(Enum):

WASHINGTON = "WASHINGTON"

CALIFORNIA = "CALIFORNIA"

OREGON = "OREGON"

class Address(BaseModel):

street: Optional[str]

city: Optional[str] = Field(description="The city name in lowercase")

state: Optional[State] = Field(description="The state abbreviation from the predefined states")

zip_code: Optional[str]

class OrderInfo(BaseModel):

id: str = Field(alias="order_id")

price: Optional[int] = Field(alias="total_price", description="The total price. Don't include shipping costs.")

items: List[Item] = Field(description="purchased_items")

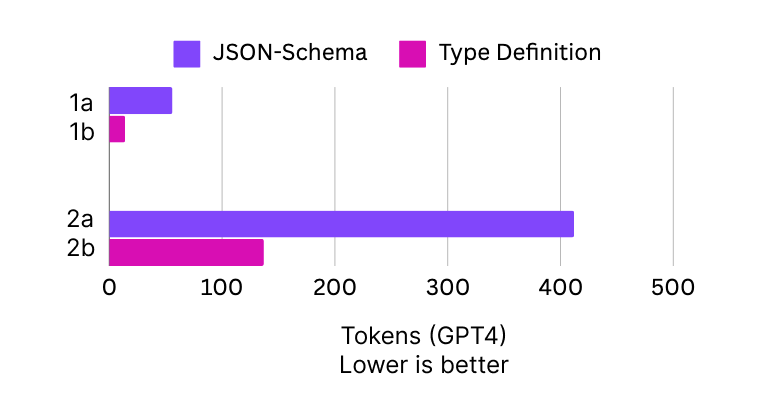

shipping_address: Optional[Address]The new schema uses a lot more tokens now -- see 2a vs 2b:

Using JSON Schema (using Instructor)

Raw prompt, no extra formatting added. Note how large this prompt becomes: even if you were to pretty print it, it would take some careful reading to derive the original data models.

Full prompt

<system>As a genius expert, your task is to understand the content and provide the parsed objects in json that match the following json_schema:

{"$defs": {"Address": {"properties": {"street": {"anyOf": [{"type": "string"}, {"type": "null"}], "title": "Street"}, "city": {"anyOf": [{"type": "string"}, {"type": "null"}], "description": "The city name in lowercase", "title": "City"}, "state": {"anyOf": [{"$ref": "#/$defs/State"}, {"type": "null"}], "description": "The state abbreviation from the predefined states"}, "zip_code": {"anyOf": [{"type": "string"}, {"type": "null"}], "title": "Zip Code"}}, "required": ["street", "city", "state", "zip_code"], "title": "Address", "type": "object"}, "Item": {"properties": {"name": {"title": "Name", "type": "string"}, "quantity": {"title": "Quantity", "type": "integer"}}, "required": ["name", "quantity"], "title": "Item", "type": "object"}, "State": {"enum": ["WASHINGTON", "CALIFORNIA", "OREGON"], "title": "State", "type": "string"}}, "properties": {"order_id": {"title": "Order Id", "type": "string"}, "total_price": {"anyOf": [{"type": "integer"}, {"type": "null"}], "description": "The total price. Don't include shipping costs.", "title": "Total Price"}, "items": {"description": "purchased_items", "items": {"$ref": "#/$defs/Item"}, "title": "Items", "type": "array"}, "shipping_address": {"anyOf": [{"$ref": "#/$defs/Address"}, {"type": "null"}]}}, "required": ["order_id", "total_price", "items", "shipping_address"], "title": "OrderInfo3", "type": "object"}

<user> {input},

<user> Return the correct JSON response within a ```json codeblock. not the JSON_SCHEMA'}]

Using type-definitions

Anyone could skim this type-definition, and derive the original data models.

Full prompt

Extract the following information from the text.

{input}

---

Return the information in JSON following this schema:

{

"order_id": string,

// The total price. Don't include shipping costs.

"total_price": int | null,

"purchased_items": {

"name": string,

"quantity": int

}[],

"shipping_address": {

"street": string | null,

// The city name in lowercase

"city": string | null,

// The state abbreviation from the predefined states

"state": "States as string" | null,

"zip_code": string | null

} | null

}

Use these US States only:

States

---

WASHINGTON

OREGON

CALIFORNIA

JSON:

That's now massive 66% cost savings for that part of your prompt, for the same performance on the extraction task.

On GPT4, thats the difference between making 400 API calls with $1, vs making 1600.

Also note that the prompt is now more readable, and can be debugged for potential issues (e.g if you made a mistake in one of the descriptions).

If you'd like to try out type-definition prompting, we have incorporated it into our DSL — called BAML. BAML helps you get structured data from LLMs using type-definitions and natural language. BAML prompt files have a markdown-like preview of the full-prompt — so you always see the prompt before you send it to the LLM — and comes with an integrated VSCode LLM Playground for testing. BAML works seamlessly with Python and TypeScript.

> Improving quality using type-definitions

Now on to measuring accuracy...

Have you ever had the LLM spit out the JSON schema back out to you instead of giving you the actual value?

Instead of

{ "name": "John Doe" }

you get something like (note, this is still valid json, just not the right json):

{

"title": "Name",

"type": "string",

"value": "John Doe"

}

GPT4 usually does fine on small JSON schemas, but can suffer from this when your schema gets more complex. On less capable models, this problem occurs more often, even with simple schemas.

The LLama 7B test

We tested Llama2 on these different formats, and observed a 6% failure rate (out of 100 tests) in generating the correct output type using json-schemas but 0% failure rate with type-definitions.

The JSON schema test fails even when we prompt it Don't return the json schema[1]. We have open-sourced our test results of JSON Schema (using Instructor) vs BAML here.

[1] Funnily enough LLMs are horrile at what-not-to-do type instructions, but we'll cover why in the future when we talk about bias.

This test uses the same OrderInfo schemas as before. We prompt Llama2 7B with an input text describing a list of items purchased:

Customer recently completed a purchase with order ID "ORD1234567". This transaction, totaling $85 without accounting for shipping costs, included an eclectic mix of items"...

<omitted for brevity>

The expected JSON output looks like this:

{

"order_id": "ORD1234567",

"total_price": 85,

"purchased_items": [...]

}

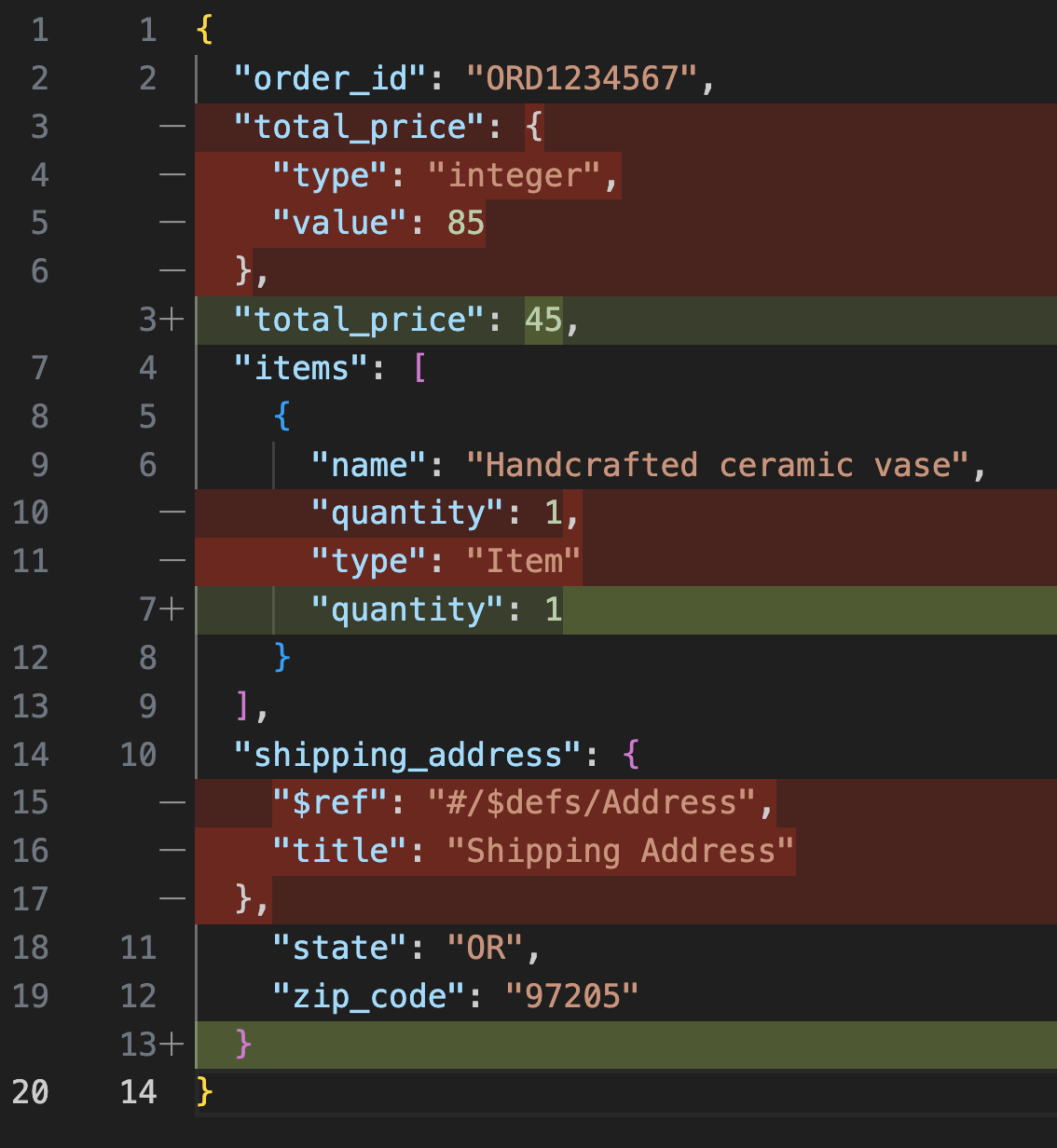

Llama2 Output - Using JSON Schema definition in the prompt

LLM Raw Text Ouput

{

"order_id": "ORD1234567",

"total_price": {

"type": "integer",

"value": 85

},

"items": [

{

"name": "Handcrafted ceramic vase",

"quantity": 1,

"type": "Item"

}

],

"shipping_address": {

"$ref": "#/$defs/Address",

"title": "Shipping Address"

},

"state": "OR",

"zip_code": "97205"

}

It's all valid JSON, but it's the incorrect schema. Here's the diff:

Fatal Issues

total_priceshould just be85, not have a type and value.shipping_addressrefers to the JSON schema model definition, instead of adding the actual shipping address.- The fields

stateandzip_codeshould be part of theshipping_addressblock.

Non-fatal issues

- each value in

itemshas an extra field"type": "Item"

Llama2 Output - Using type-definitions in prompt

LLM Raw Text Ouput

{

"id": "ORD1234567",

"price": 85,

"items": [

{

"name": "handcrafted ceramic vase",

"quantity": 1

},

{

"name": "artisanal kitchen knives",

"quantity": 2

},

{

"name": "vintage-inspired wall clock",

"quantity": 1

}

],

"shipping_address": {

"street": "456 Artisan Way",

"city": "portland",

"state": "OR",

"zip_code": "97205"

}

}

The answer was correct 100% of the time (out of 100 tests).

All we did was change how we defined the schema.

Fewer tokens led to a quality improvement, not degradation. Let's figure out why this is the case.

> Why type-definition prompting works better (or at least our thoughts)

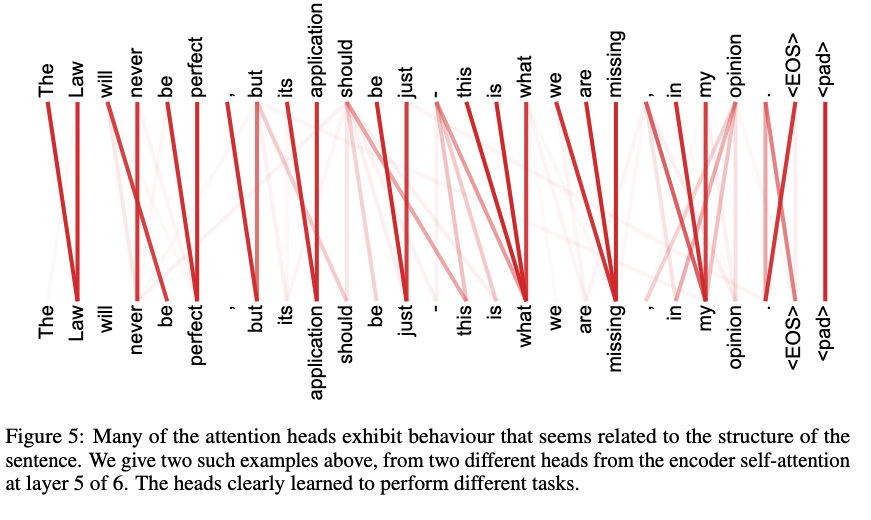

We must first look into how the Transformer architecture works. If you're not familiar, Transformers - what LLMs are built on - use a mechanism called "attention" that looks at different parts of the input as the text is being generated.

Expand this for the full explanation

This means the model can decide which words are most relevant to the current word it's considering, allowing it to understand context and relationships within the text. Note we say "word" but really it's "tokens", more on this later. A model has several attention-heads doing this same process, but each sees a different view in the embedding space that affects how strong two tokens are associated. One head may be looking for structural similarity between words, and another may be looking for meanings between words for example. It's much more complicated than this, but it's a 10,000 foot view.

Here is a visualization from the Attention Is All You Need Paper) of an attention head looking at the structure of a sentence:

As a general rule of thumb, the more tokens a model needs to understand to generate the each subsequent token, the harder it is for the model to be correct. Here's our 3 insights as to why type-definitions work better, not in spite of, but thanks to fewer tokens.

1. A type-definition is a lossless compression of JSON Schema

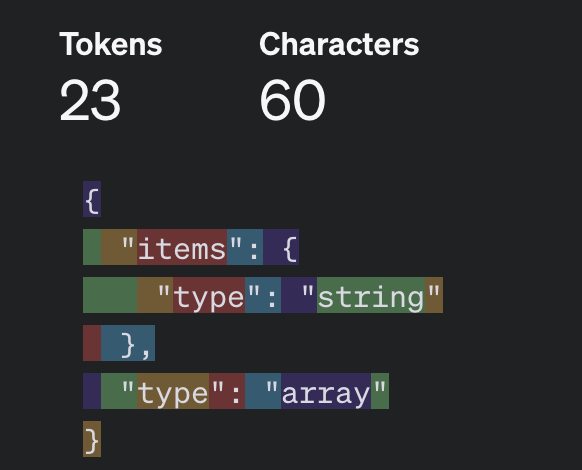

Lets say you wanted to output an array of strings. With the JSON schema approach you would have:

{

"items": {

"type": "string"

},

"type": "array"

}

In order for the model to understand what you want, it has to process 23 tokens, and then build the appropriate nested relationships between them.

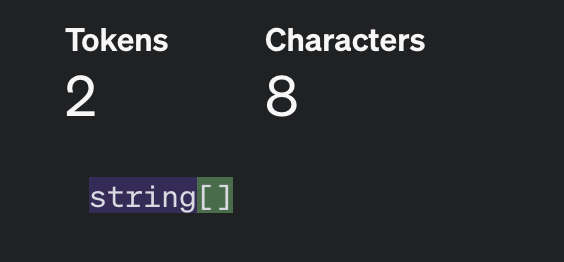

In the type-definition approach:

string[]

Only, 2 tokens have to be processed.

Less tokens, means less relationships are required. The model can spend its compute power on performing your task instead of understanding your output format.

2. LLM Tokenizers are already optimized for type-definitions

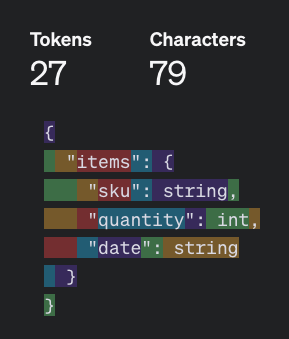

What's really interesting is that the tokenization strategy most LLMs use is actually already optimized for type-definitions. Lets take the type-definition:

{

"items": {

"sku": string,

"quantity": int,

"date": string

}

}

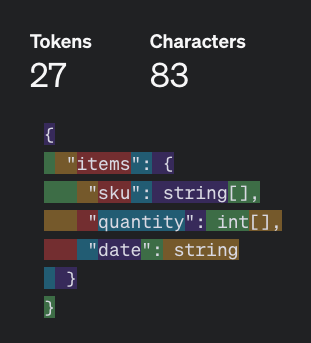

If you wanted sku and quantity to both be arrays, you would spend 0 extra tokens!

{

"items": {

"sku": string[],

"quantity": int[],

"date": string

}

}

This is because the token ,\n (token id 345) is just replaced with the token [],\n (token id 46749).

The token vocabulary of LLMs already has many special tokens to represent complex object (like

[],or?,or[]}).

3. The distance between any two related tokens is shorter

Another key aspect of type-defintions is their ability to naturally keep the average distance between any two related tokens short. Relationships between words that are far apart in a sentence are more complex to understand than relationships between words adjacent to each other.

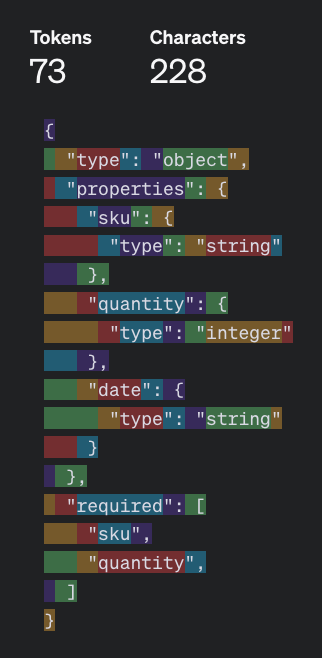

For example, take the idea of required fields. With JSON schemas you would have:

{

"type": "object",

"properties": {

"sku": {

"type": "string"

},

"quantity": {

"type": "integer"

},

"date": {

"type": "string"

}

},

"required": [

"sku",

"quantity",

]

}

The distance between the property sku 's defintion (token range 13-27), and the concept of the property sku being required (token range 59 - 67), is 40 tokens.

Further, the only thing that indicates date is not required is the absence of the date token in token range 59-73, where the "required" block is. As your data models get more complex, that delta can easily increase from 40 to hundreds of tokens. The model will have to "hop around" between different parts of the schema as it's generating the output to understand the relationships between the tokens.

In the type-definition world, we can represent the exact same concept with fewer tokens, and each token is more closely relevant to its neighbors. In fact, since we saved on the tokens, we can even add in a quick description for the date field, and still well under the token count.

{

"sku": string,

"quantity": int,

// in ISO format

"date": string?

}Note, now we can get the date in a more standardized format by adding a comment above the date. Coincidentally, this is both more readable for us humans as well!

Keeping the relevant parts of your data model naturally imply a relationship. Inferred relationships that require the LLM to read the entire data model are hard!

Obligatory marketing…

We hope this technique helps you improve your pipelines (and get better cost savings)!

If you're ready to start using type-definitions, migrate to BAML in less than 5 minutes today, and give us a Star!

BAML comes with:

- Type-definition prompting built in

- Live prompt previews (before you call the LLM)

- A testing playground right in VSCode

- A parser that transforms the LLMs output into your schema - even if the LLM messes up!

If you have any about your schemas feel free to reach out on Discord or email us (contact@boundaryml.com).

Our mission is to build the best developer experience for AI engineers looking to get structured data from LLMs. Subscribe to the newsletter to hear about future posts like this one.

Coming soon:

- Function calling vs JSON mode - why they're different, and why they're not

- JSONish - A new JSON spec + parser built specifically for LLMs