BAML Thoughts

Insights, tutorials, and updates from the BAML team. Stay ahead with the latest in AI development.


1 co-founder, 5 years, 12 pivots, still not dead

How we navigated 12 pivots without hating each other

Vaibhav Gupta

about 18 hours ago

Structured Outputs Create False Confidence

Constrained decoding seems like the greatest thing since sliced bread, but it often forces models to prioritize output conformance over output quality.

Sam Lijin

17 days ago

LLMs do not understand numbers

Don't ask it to add a confidence score. Don't ask it to sum up items on a receipt. Don't ask it to confirm how many rows there are in a PDF.

Sam Lijin

about 1 month ago

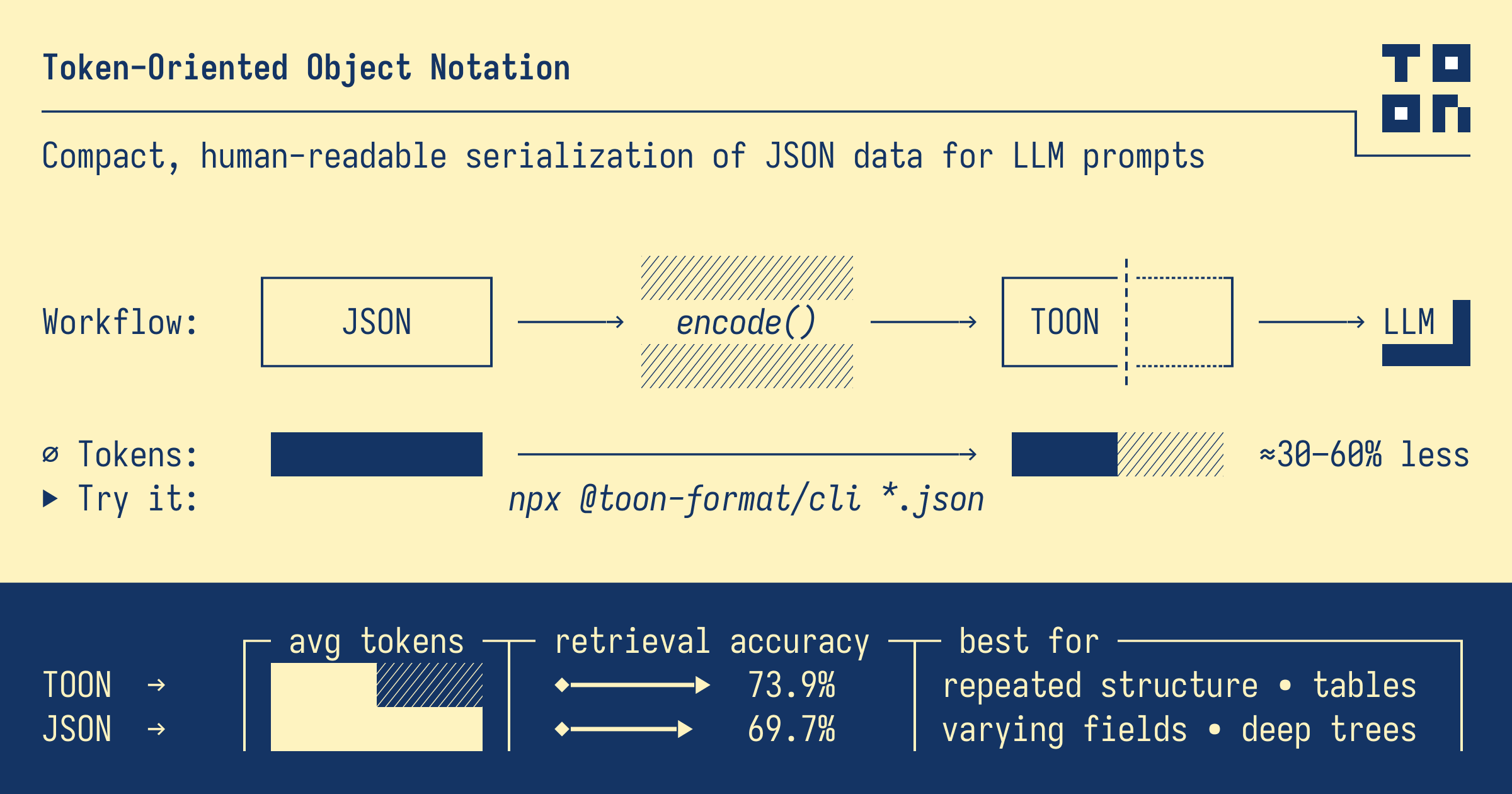

Beware When Using TOON

TOON is a new serialization format, but it has many pitfalls.

Sam Lijin

about 1 month ago

A cautionary tale on vibes

Post-mortem of the 0.212.0 timeouts incident

Greg Hale

about 2 months ago

You could invent the next coding agent!

How BAML makes tool calls easy to integrate into apps

Greg Hale

2 months ago

Using UUIDs in prompts is bad

Guidance on dealing with entity IDs in LLM functions

Greg Hale

3 months ago

Advanced Prompting Workshop Notes

Notes from our 2024 Prompting Workshop

Vaibhav Gupta

5 months ago

How to write a Zed extension for a made up language

Exploring the fascinating world of Wasm, Zed extensions and LSP

Egor Lukiyanov

6 months ago

The curious case of environment variables

Environment variables in BAML are not as simple as they seem. It's tricky to pass them to, and read them from, the generated language's runtime. This post describes how we solved the problem by loading them lazily.

Rahul Tiwari

7 months ago

Tech Preview: Workflows

Specify complex workflows directly in BAML

Greg Hale

7 months ago

Lambda the Ultimate AI Agent

A new take on agentic frameworks

Greg Hale

7 months ago

Tutorial - An Agentic AI App with Streaming

An end-to-end agentic chatbot tutorial for React devs

Greg Hale

8 months ago

Tool use with Llama API (and reasoning)

How to do tool-calling or function-calling with Llama API (with reasoning)

Vaibhav Gupta

8 months ago

Structured outputs with Llama 4

How to do tool-calling or function-calling with Llama 4

Vaibhav Gupta

9 months ago

Structured outputs with QwQ 32B

How to use QwQ 32B to do function calling or tool calls

Aaron Villalpando

10 months ago

Full stack BAML with React/Next.js

Auto generated React hooks for your BAML functions

Chris Watts

11 months ago

Structured outputs with Gemini 2.0

How to do tool-calling or function-calling with Gemini 2.0

Aaron Villalpando

11 months ago

Structured outputs with o3-mini

How to do tool-calling or function-calling with o3-mini

Vaibhav Gupta

11 months ago

BAML Launch Week Day 5

Roadmap to BAML 1.0

Vaibhav Gupta

11 months ago

BAML Launch Week Day 4

Semantic Streaming

Greg Hale

11 months ago

BAML Launch Week Day 3

Type System

Antonio Sarosi

11 months ago

BAML Launch Week Day 2

BAML Chat

Sam Lijin

11 months ago

BAML Launch Week Day 1

VS Code LLM Playground 2.0

Aaron Villalpando

11 months ago

AI Agents Need a New Syntax

A proposal for a new way to build AI applications and agents

Vaibhav Gupta

11 months ago

BAML Launch Week Announcement

Join us on January 27-31

Vaibhav Gupta

11 months ago

Structured outputs with Deepseek R1

How to do tool-calling or function-calling with Deepseek R1

Vaibhav Gupta

11 months ago

Cursor support for BAML

BAML is now supported in Cursor

Sam Lijin

about 1 year ago

Structured outputs with OpenAI o1

How to use OpenAI o1 to do function calling or tool calls

Vaibhav Gupta

about 1 year ago

A new trick for generating code in JSON

BAML now supports parsing triple-backtick code blocks in LLM outputs

Sam Lijin

about 1 year ago

Announcing LLM Eval Support for Python, Ruby, TypeScript, Go, and more.

Use BAML to evaluate your LLM applications regardless of the language you use to call them

Greg Hale

about 1 year ago

Generating Structured Output from a Dynamic JSON schema

Modify LLM response models at runtime.

Aaron Villalpando

about 1 year ago

Every Way To Get Structured Output From LLMs

A survey of every framework for extracting structured output from LLMs, and how they compare.

Sam Lijin

about 1 year ago

Semantic Streaming vs Token-based Streaming

A new technique for streaming structured output from LLMs

Aaron Villalpando

about 1 year ago

Bringing Structured Outputs and Schema-Aligned Parsing to Golang, Java, PHP, Ruby, Rust, and More

BAML now integrates with OpenAPI, allowing you to call BAML functions from any language.

Sam Lijin

over 1 year ago

Structured Output with Ollama

Getting structured output out of Ollama, using novel parsing techniques.

Sam Lijin

over 1 year ago

Beating OpenAI's structured outputs on cost, accuracy and speed — An interactive deep-dive

We leveraged a novel technique, schema-aligned parsing, to achieve SOTA on BFCL with every LLM.

Vaibhav Gupta

over 1 year ago

Transparency as a Tenet

Exposing the inner workings of BAML

Anish Palakurthi

over 1 year ago

Building a New Programming Language in 2024, pt. 1

An overview of the work that goes into building a new programming language.

Sam Lijin

over 1 year ago

Use Audio with your LLMs!

Capturing Non-Text Information and Richer Context with LLMs

Anish Palakurthi

over 1 year ago

Announcing Gemini Support!

Applying structure to Gemini output with BAML

Anish Palakurthi

over 1 year ago

Building RAG in Ruby, using BAML, with streaming!

How to do RAG with Ruby streaming AI APIs

Sam Lijin

over 1 year ago

Build RAG with citations in NextJS (with streaming!)

How to do RAG with NextJS streaming AI APIs

Aaron Villalpando

over 1 year ago

Your prompts are using 4x more tokens than you need

A deep-dive into using type definitions instead of JSON schemas in prompt engineering to improve accuracy and reduce costs

Aaron Villalpando

over 1 year ago

Announcing BAML - The typesafe interface to LLMs, with built-in testing, guardrails and observability

BAML is a lightweight programming language to help perform structured prompting in a typesafe way.

Vaibhav Gupta

about 2 years ago