Structured Outputs Create False Confidence

Constrained decoding seems like the greatest thing since sliced bread, but it often forces models to prioritize output conformance over output quality.

Sam Lijin

Update (Dec 21): this post is now on the Hacker News front page! We've updated this post to be more precise about our claims and have also added some clarifications at the end. You can see the original version of this post here.

If you use LLMs, you've probably heard about structured outputs. You might think they're the greatest thing since sliced bread. Unfortunately, structured outputs also often degrade response quality.

Specifically, if you use an LLM provider's structured outputs API, you're likely to get a lower quality response than if you use their normal text output API:

- ⚠️ you're more likely to make mistakes when extracting data, even in simple cases;

- ⚠️ you're probably not modeling errors correctly;

- ⚠️ it's harder to use techniques like chain-of-thought reasoning; and

- ⚠️ in the extreme case, it can be easier to steal your customer data using prompt injection.

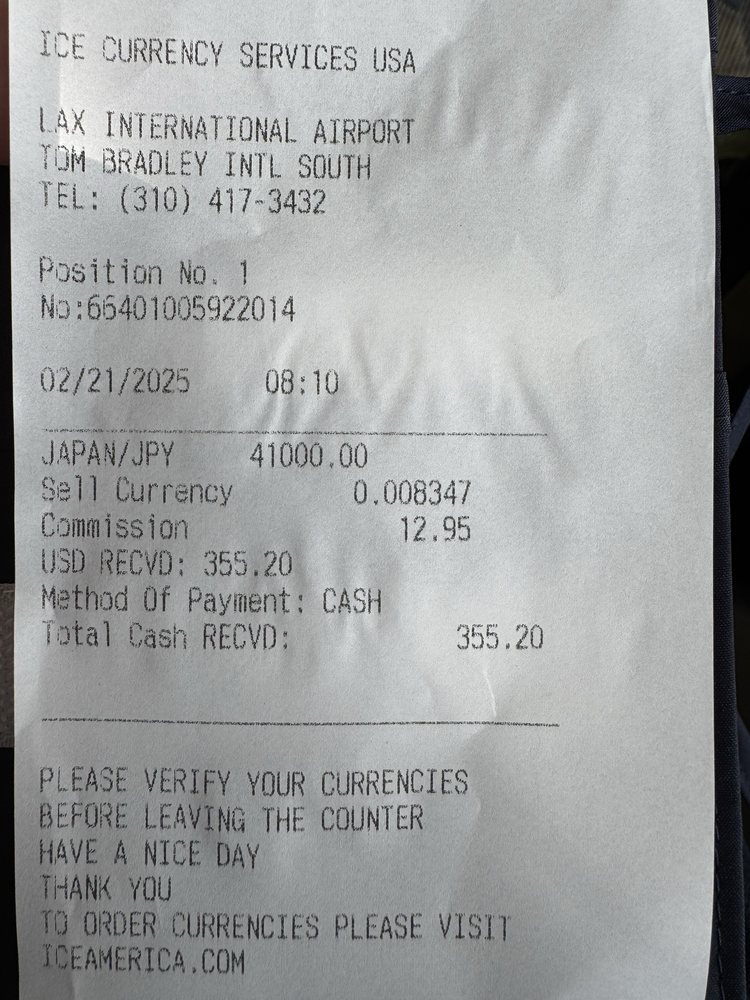

These are very contentious claims, so let's start with an example: extracting data from a receipt.

If I use an LLM to extract the receipt entries, it should be able to tell me that one of the items is (name="banana", quantity=0.46), right?

Well, using OpenAI's structured outputs API with gpt-5.2 - released literally this week! - it will claim that the banana quantity is 1.0:

{

"establishment_name": "PC Market of Choice",

"date": "2007-01-20",

"total": 0.32,

"currency": "USD",

"items": [

{

"name": "Bananas",

"price": 0.32,

"quantity": 1

}

]

}

However, with the same model, if you just use the completions API and then parse the output, it will return the correct quantity:

{

"establishment_name": "PC Market of Choice",

"date": "2007-01-20",

"total": 0.32,

"currency": "USD",

"items": [

{

"name": "Bananas",

"price": 0.69,

"quantity": 0.46

}

]

}

Click here to see the code that was used to generate the above outputs.

This code is also available on GitHub.

#!/usr/bin/env -S uv run

# /// script

# requires-python = ">=3.10"

# dependencies = ["openai", "pydantic", "rich"]

# ///

"""

If you have uv, you can run this code by saving it as structured_outputs_quality_demo.py and then running:

chmod u+x structured_outputs_quality_demo.py

./structured_outputs_quality_demo.py

This script is a companion to https://boundaryml.com/blog/structured-outputs-create-false-confidence

"""

import json

import re

from openai import OpenAI

from pydantic import BaseModel, Field

from rich.console import Console

from rich.pretty import Pretty

class Item(BaseModel):

name: str

price: float = Field(description="per-unit item price")

quantity: float = Field(default=1, description="If not specified, assume 1")

class Receipt(BaseModel):

establishment_name: str

date: str = Field(description="YYYY-MM-DD")

total: float = Field(description="The total amount of the receipt")

currency: str = Field(description="The currency used for everything on the receipt")

items: list[Item] = Field(description="The items on the receipt")

client = OpenAI()

console = Console()

def run_receipt_extraction_structured(image_url: str):

"""Call the LLM to extract receipt data from an image URL and return the raw response."""

prompt_text = (

"""

Extract data from the receipt.

"""

)

response = client.beta.chat.completions.parse(

model="gpt-5.2-2025-12-11",

messages=[

{

"role": "system",

"content": "You are a precise receipt extraction engine. Return only structured data matching the Receipt schema.",

},

{

"role": "user",

"content": [

{

"type": "text",

"text": prompt_text,

},

{"type": "image_url", "image_url": {"url": image_url}},

],

},

],

response_format=Receipt,

)

return response.choices[0].message.content, response.choices[0].message.parsed

def run_receipt_extraction_freeform(image_url: str):

"""Call the LLM to extract receipt data from an image URL and return the raw response."""

prompt_text = (

"""

Extract data from the receipt.

Explain your reasoning, then answer in JSON:

{

establishment_name: string,

// YYYY-MM-DD

date: string,

// The total amount of the receipt

total: float,

// The currency used for everything on the receipt

currency: string,

// The items on the receipt

items: [

{

name: string,

// per-unit item price

price: float,

// If not specified, assume 1

quantity: float,

}

],

}

"""

)

response = client.beta.chat.completions.parse(

model="gpt-5.2-2025-12-11",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

"text": prompt_text,

},

{"type": "image_url", "image_url": {"url": image_url}},

],

},

],

)

return response.choices[0].message.content, json.loads(re.search(r"```json(.*?)```", response.choices[0].message.content, flags=re.DOTALL).group(1))

def main() -> None:

images = [

{

"title": "Parsing receipt: fractional quantity",

"url": "https://boundaryml.com/receipt-fractional-quantity.jpg",

"expected": "You should expect quantity to be 0.46."

},

{

"title": "Parsing receipt: elephant",

"url": "https://boundaryml.com/receipt-elephant.jpg",

"expected": "You should expect an error."

},

{

"title": "Parsing receipt: currency exchange",

"url": "https://boundaryml.com/receipt-currency-exchange.jpg",

"expected": "You should expect a warning about mixed currencies."

},

]

print("This is a demonstration of how structured outputs create false confidence.")

for entry in images:

title = entry["title"]

url = entry["url"]

completion_structured_content, _ = run_receipt_extraction_structured(url)

completion_freeform_content, _ = run_receipt_extraction_freeform(url)

console.print("[cyan]--------------------------------[/cyan]")

console.print(f"[cyan]{title}[/cyan]")

console.print(f"Asking LLM to parse receipt from {url}")

console.print(entry['expected'])

console.print()

console.print("[cyan]Using structured outputs:[/cyan]")

console.print(completion_structured_content)

console.print()

console.print("[cyan]Parsing free-form output:[/cyan]")

console.print(completion_freeform_content)

if __name__ == "__main__":

main()

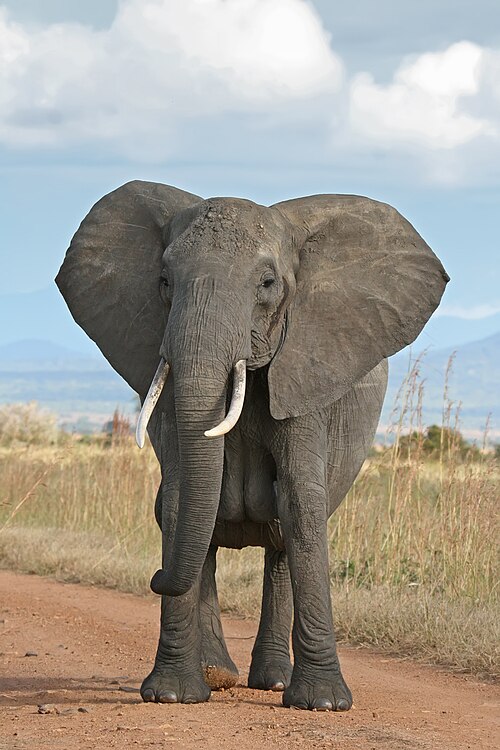

Now, what happens if someone submits a picture of an elephant?

Or a currency exchange receipt?

In these scenarios, you want to let the LLM respond using text. You want it to be able to say that, hey, you're asking me to parse a receipt, but you gave me a picture of an elephant, I can't parse an elephant into a receipt.

If you force the LLM to respond using structured outputs, you take that ability away from the LLM. Sure, you'll get an object that satisfies your output format, but it'll be meaningless. It's like when you file a bug report, and the form has 5 mandatory fields about things that have nothing to do with your bug, but you have to put something in those fields to file the bug report: the stuff you put in those fields will probably be useless.

I can design my output format better!

Yes and no.

Yes, you can tell your LLM to return { receipt data } or { error } . But what kinds of errors are you going to ask it to consider?

- What kind of error should it return if there's no

totallisted on the receipt? Should it even return an error or is it OK for it to returntotal = null? - What if it can successfully parse 7 of 8 items on the receipt, but it's not sure about the 8th item? Should it return (1) the 7 successfully parsed items and a partial parse of the 8th item, (2) only the 7 successfully parsed items and discard the 8th or (3) fail parsing entirely?

- What if someone submits a picture of an elephant? What kind of error should be returned in that case?

In addition, as you start enumerating all of these errors, you run into the pink elephant problem: the more your prompt talks about errors, the more likely the LLM is to respond with an error.

Think of it this way: if someone presses Ctrl-C when running your binary, it is a Good Thing that

the error can propagate all the way up through your binary, without you having to explicitly write try { ... } catch CtrlCError { ... } in every function in your codebase.

In the same way that you often want to allow errors to just propagate up while writing software, and only explicitly handle some errors, your LLM should be allowed to respond with errors in whatever fashion it wants to.

Chain-of-thought is crippled by structured outputs

"Explain your reasoning step by step" is a magic incantation that seemingly makes LLMs much smarter. It also turns out that this trick doesn't work nearly as well when using structured outputs, and we've known this since Aug 2024 (edit: it turns out the "Let Me Speak Freely" paper is fundamentally flawed, but we do still believe the claim to be true).

To understand this finding, the intuition I like to use, is to think of every model of having an intelligence "budget", and that if you try to force an LLM to reason in a very specific format, you're making the LLM spend intelligence points on useless work.

To make this more concrete, let's use another example. If you prompt an LLM to give you JSON output and reason about it step-by-step, its response will look something like this:

If we think step by step we can see that:

1. The email is from Amazon, confirming the status of a specific order.

2. The subject line says "Your Amazon.com order of 'Wood Dowel Rods...' has shipped!" which indicates that the order status is 'SHIPPED'.

3. [...]

Combining all these points, the output JSON is:

```json

{

"order_status": "SHIPPED",

[...]

}

```

Notice that although the response contains valid JSON, the response itself is not valid JSON, because of the reasoning text at the start. In other words, you can't use basic chain-of-thought reasoning with structured outputs.

You could modify your schema, and add reasoning: string fields to your output schema, and let the LLM respond with something like this:

{

"reasoning": "If we think step by step we can see that:\n\n 1. The email is from Amazon, confirming the status of a specific order.\n2. The subject line says \"Your Amazon.com order of 'Wood Dowel Rods...' has shipped!\" [...]

...

}

In other words, if you're using a reasoning field with structured outputs, instead of simply asking the LLM to reason about its answer, you're also forcing it to escape newlines and quotes and format that correctly as JSON. You're basically asking the LLM to put a cover page on its TPS report.

Why are structured outputs often worse?

(To understand this section, you'll need a bit of background on transformer models, specifically how logit sampling works. Feel free to skip this section if you don't have this background.)

Model providers like OpenAI and Anthropic implement structured outputs using a technique called constrained decoding:

By default, when models are sampled to produce outputs, they are entirely unconstrained and can select any token from the vocabulary as the next output. This flexibility is what allows models to make mistakes; for example, they are generally free to sample a curly brace token at any time, even when that would not produce valid JSON. In order to force valid outputs, we constrain our models to only tokens that would be valid according to the supplied schema, rather than all available tokens.

In other words, constrained decoding applies a filter during sampling that says, OK, given the output that you've produced so far, you're only allowed to consider certain tokens.

For example, if the LLM has so far produced {"quantity": 51, and you're constraining output decoding to satisfy { quantity: int, ... }:

{"quantity": 51.7would not satisfy the constraint, so.7is not allowed to be the next token,{"quantity": 51,would satisfy the constraint, so,is allowed to be the next token,{"quantity": 510would satisfy the constraint, so0is allowed to be the next token (albeit, in this example, with low probability!),

But if the LLM actually wants to answer with 51.7 instead of 51, it isn't allowed to, because of our constraint! (Also, 51 is less correct than 52 in this scenario.)

Sure, if you're using constrained decoding to force it to return {"quantity": 51.7} instead of {"quantity": 51.7,} - because trailing commas are not allowed in JSON - it'll probably do the right thing. But that's something you can write code to handle, which leads me to my final point.

Just parse the output

OK, so if structured outputs are bad, then what's the solution?

It turns out to be really simple: let the LLM do what it's trained to do. Allow it to respond in a free-form style:

- let it refuse to count the number of entries in a list

- let it warn you when you've given it contradictory information

- let it tell you the correct approach when you inadvertently ask it to use the wrong approach

Using structured outputs, via constrained decoding, makes it much harder for the LLM to do any of this. Even though you've crafted a guarantee that the LLM will return a response in exactly your requested output format, that guarantee comes at the cost of the quality of that response, because you're forcing the LLM to prioritize complying with your output format over returning a high-quality response. That's why structured outputs create false confidence: it's entirely non-obvious that you're sacrificing output quality to achieve output conformance.

Parsing the LLM's free-form output, by contrast, enables you to retain that output quality. In fact, last year we demonstrated that using this technique, not only could you outperform constrained decoding, but you could also make gpt-4o-mini outperform baseline gpt-4o (constrained decoding at the time was described as "function calling (strict)").

(In a scenario where an attacker is trying to convince your agent to do something you didn't design it to do, the parsing also serves as an effective defense-in-depth layer against malicious prompt injection.)

Doing this parsing effectively, though, is rather involved:

- you need a way to embed the output format in the prompt, preferably something less verbose than JSON schema;

- you need a parser that can find JSON in your output and, when working with non-frontier models, can handle unquoted strings, key-value pairs without comma delimiters, unescaped quotes and newlines; and

- you need a parser that can coerce the JSON into your output schema, if the model, say, returns a float where you wanted an int, or a

stringwhere you wanted astring[].

It turns out that not only are these individually pretty hard problems to solve, but it's also really hard to wrap these in an ergonomic API. That's how we ended up with the implementation of schema-aligned parsing that we've made available in BAML, our open-source, local-only DSL.

Clarifications

In response to a lot of the comments we've gotten so far:

To be more precise about my claim, it's not that constrained decoding always gives definitively bad outputs (I went too clickbait with my original tagline, I admit it), so much as that it's really easy, with constrained decoding, to create the illusion that you're getting good responses.

Because it forces the LLM to prioritize conforming to your output schema (and in particular forces conforming to JSON), without giving the LLM an escape hatch, it's really easy when using constrained decoding to shift errors from very visible "JSON.parse failed" quantitative errors to very subtle "my users are complaining that I give them crappy results" quality errors.

(This is a very hard message to convey at the start of an article, and depends on a lot of nuance that needs to first be explained with examples, which is why I wrote it the way I did.)

In response to more specific points:

-

Give me evals.

Here you go: last year on BFCL with

gpt-4owe achieved 93.63% accuracy, vs 91.37% accuracy with constrained decoding (then called "Function Calling (Strict)"). (We should re-run these at some point, but we haven't blocked out the time for it.) -

Can't you just use reasoning models instead of chain-of-thought?

Yes, you can use reasoning models to combine chain-of-thought with constrained decoding, but it requires the model to be explicitly trained with

<analysis>tokens or the equivalent thereof. This is only true of expensive, frontier models (gpt-5, gpt-5-mini, Opus 4.5, Gemini 3) and does not work with gpt-4o-mini, gpt-4.5-mini, nor many of the most commonly used models. -

"Let Me Speak Freely" is a fundamentally flawed paper.

I hadn't seen dottxt's "Say What You Mean" response to the "Let Me Speak Freely" paper before, and their critique of the "Let Me Speak Freely" paper's methodology is entirely valid.

I still believe that combining reasoning with structured outputs can really mess with your final response quality, because putting reasoning fields in JSON forces the model to escape tokens, which as Aider documented last year, causes models to perform substantially worse. Sure, if your reasoning doesn't need to escape text, then this isn't an issue, but now this is another thing that everyone on your team has to remember while they're iterating on prompts.

Thanks all for challenging us on this post: it's super valuable feedback, and we really appreciate the time y'all are taking to read and respond to us.